Phase 1: Obtaining the Data

As the photos above suggest, the published volumes are an OCR nightmare, if not a complete impossibility. Luckily, however, much of the summary information contained in the printed volumes has already been digitized and compiled on a website under the leadership of Jürgen Sarnowsky, a preeminent scholar of the Teutonic Order in Prussia at the Universität Hamburg.

Sarnowsky and his team conveniently organize their website into links labeled by year, ranging from 1140 all the way up to 1525 (the year of the Order’s secularization in Prussia) and beyond. Each year’s page features a list of documents published in the PrUB organized chronologically.

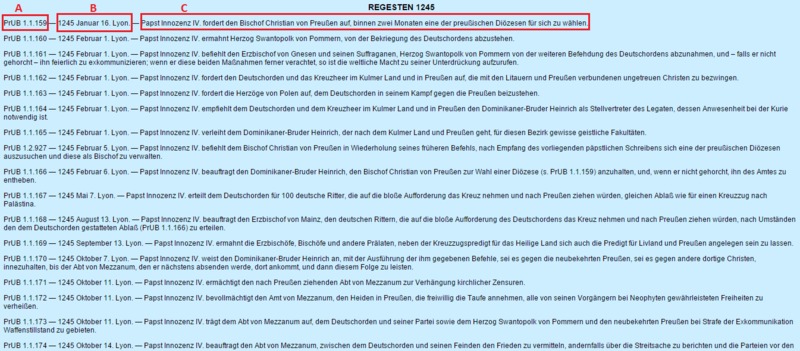

The screenshot to the right is the page for the year 1245. The website has compiled the information provided for each individual document in the printed volumes, including its identification number in the published volume (labeled A), the date and place (B) of origin, and a brief description of the document’s contents (C).

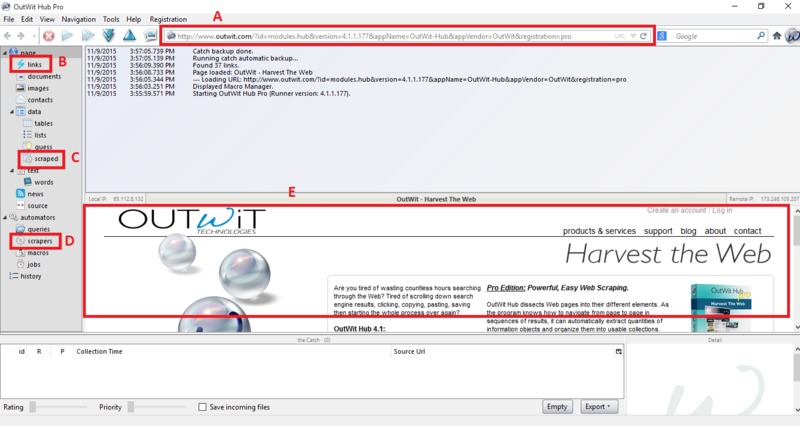

Lucky as it is that this information is already conveniently digitized, there are two problems. First, there is the problem of how to convert this HTML data into a useable format that I can compile into my own database (“datamining”). To tackle this, I used the scraping program OutWit Hub (limited trial version available for free download), a batch downloader which uses simple programming to convert HTML into a useable format (like CSV).

It works like this:

- Enter the link you want to datamine in the search box (labeled A). The website’s contents will appear in the box below (E).

- It is possible to download in batches using the “Links” tab (B), as demonstrated later.

- The main trick is in designing a “scraper” (D), which uses basic programming to play on patterns within the HTML.

- All “scraped” data can be accessed and exported to a user-friendly format (C).

The second problem will come back to haunt us later. For now, here is a walkthrough of the steps to take in datamining the Virtuelles Preußisches Urkundenbuch.

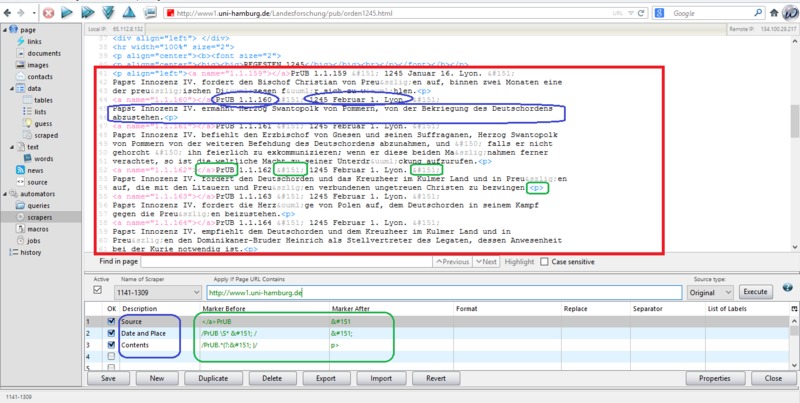

To design a scraper, we first need to identify the desired information as it is formatted within the HTML source. Take the earlier example of the year 1245. Using the interface under the “scrapers” tab, enter the URL for 1245; the page’s HTML code will appear below, as seen in the red box. Circled in blue are the elements to be datamined for each entry: the source, the date and place, and the contents description.

The next step is to find consistent patterns in how these desired elements are embedded in the code. For the year 1245 (and, as it turns out, from 1140 up to 1309), the patterns are fairly simple; they are circled in green. Each entry starts with code that ends in `</a>`, immediately followed by “PrUB” and the number of the document within the volume. Then there is a dash (encoded here as “—”), followed by the date and place. A second dash begins the contents description, and each entry ends with `<p>`.

This scraper is pretty simple to design. It has three components, one for each of the elements identified above (Source, Date and Place, and Contents), circled in blue. The user tells the program how to identify these elements by the consistent markers that come before and after them, entered in the following two columns (circled in green). It takes a lot of trial and error to perfect the scraper, and some patterns require more complex markers than others. In the example above, there is a mixture of basic characters and regular expressions (identified with the / symbol).
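For readers without OutWit Hub, the same before-and-after marker idea can be approximated with a regular expression. This is only a rough sketch in Python, not the scraper I actually built: the sample HTML is made up to imitate the pattern described above (the real pages may differ in detail), and the names `ENTRY` and `scrape_entries` are my own inventions for illustration.

```python
import re

DASH = "\u2014"  # the long dash that separates the three fields

# Made-up sample imitating the described pattern; this is NOT real site data.
SAMPLE_HTML = (
    f'<a name="x1"></a>PrUB 101 {DASH} 1245 Jan 1, Placeholder Town {DASH} '
    "First placeholder description.<p>\n"
    f'<a name="x2"></a>PrUB 102 {DASH} 1245 Feb 2, Another Place {DASH} '
    "Second placeholder description.<p>\n"
)

# Each named group sits between a "marker before" and a "marker after":
#   source:     after </a>,         before the first dash
#   date_place: after the first dash, before the second dash
#   contents:   after the second dash, before <p>
ENTRY = re.compile(
    rf"</a>\s*(?P<source>PrUB[^{DASH}]*?)\s*{DASH}"
    rf"\s*(?P<date_place>.*?)\s*{DASH}"
    r"\s*(?P<contents>.*?)\s*<p>",
    re.DOTALL,
)


def scrape_entries(html):
    """Return one dict per entry with source, date_place and contents."""
    return [match.groupdict() for match in ENTRY.finditer(html)]


if __name__ == "__main__":
    for row in scrape_entries(SAMPLE_HTML):
        print(row)
```

As with the OutWit scraper, expect trial and error: any entry whose markup departs from the pattern is silently skipped, so it is worth comparing the number of matches against the number of entries visible on the page.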

Once the scraper is ready, it’s time to put it to use.

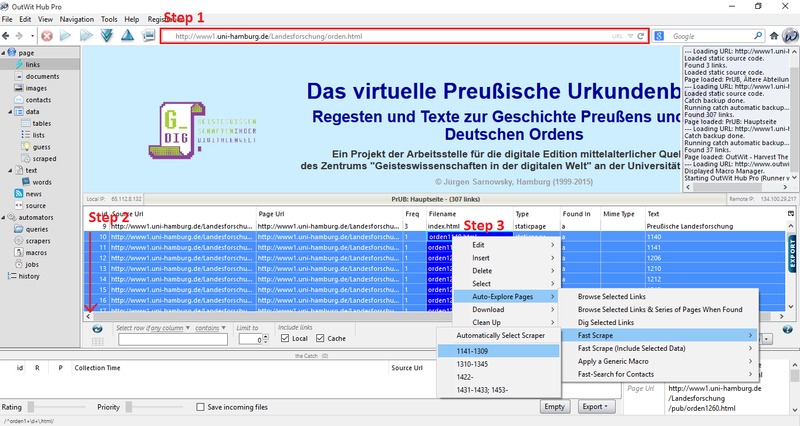

Step 1: Proceed to the “Links” tab, then type in the URL for the page containing all the links to each year, because this will allow batch-mining from multiple years at once.

Step 2: In the list below, highlight all relevant links (in this case, those corresponding to years).

Step 3: Right click, then use the drop-down menus to select and click the appropriate scraper. This will run the scraper on the highlighted links and fill a table with freshly mined data.

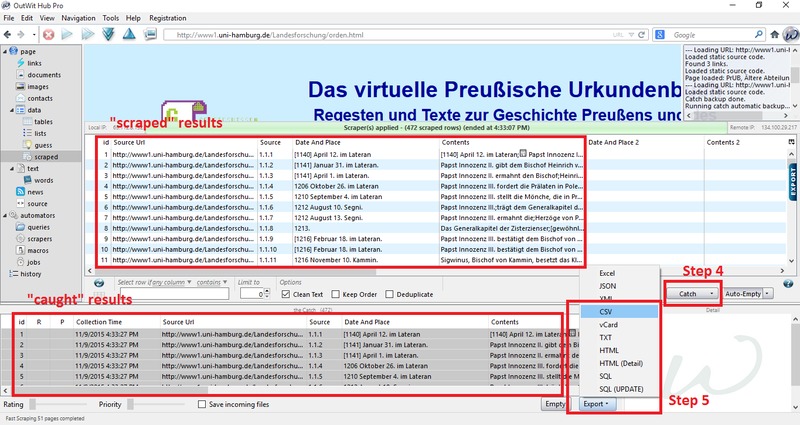

Proceed to the “scraped” tab and marvel at the results. Then, take a look through them to judge whether the scraper worked properly or not. If it did not, there are some design flaws to work through.

Step 4: If the results look good, the “catch” button will take the results and store them in the box at the bottom.

Step 5: Finally, the “caught” results can be exported to the desired format. In this case, I used CSV format.
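The batch-and-export steps above can be imitated in a few lines of Python. This is a minimal sketch under stated assumptions, not what OutWit Hub does internally: it assumes the year pages have already been downloaded into a dictionary mapping year to HTML text, and `batch_scrape`, `export_rows`, and the toy `demo_scrape` stand-in are hypothetical names of my own.

```python
import csv
import io

FIELDS = ["year", "source", "date_place", "contents"]


def demo_scrape(html):
    """Toy stand-in for a real scraper: one entry per line, fields split by '|'."""
    rows = []
    for line in html.splitlines():
        if line.strip():
            source, date_place, contents = line.split("|")
            rows.append(
                {"source": source, "date_place": date_place, "contents": contents}
            )
    return rows


def batch_scrape(pages, scrape):
    """Run `scrape` on every page (a year -> HTML mapping) and tag rows by year."""
    rows = []
    for year, html in sorted(pages.items()):
        for entry in scrape(html):
            rows.append({"year": year, **entry})
    return rows


def export_rows(rows, handle):
    """Write the collected rows to an open text handle as CSV."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    # Placeholder pages, not real data from the website.
    pages = {1246: "PrUB 2|1246, Place B|Two", 1245: "PrUB 1|1245, Place A|One"}
    buffer = io.StringIO()
    export_rows(batch_scrape(pages, demo_scrape), buffer)
    print(buffer.getvalue())
```

Passing the scraper in as a function makes it easy to swap in a different one for pages that follow a different format.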

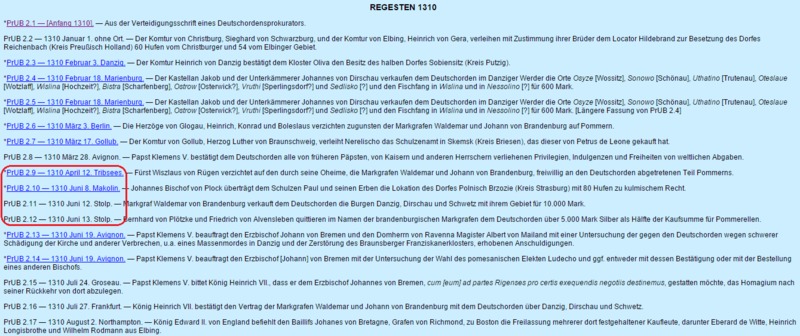

This brings me to the second problem I alluded to earlier. My first scraper worked well until the year 1310, when the formatting of the HTML suddenly became much less consistent.

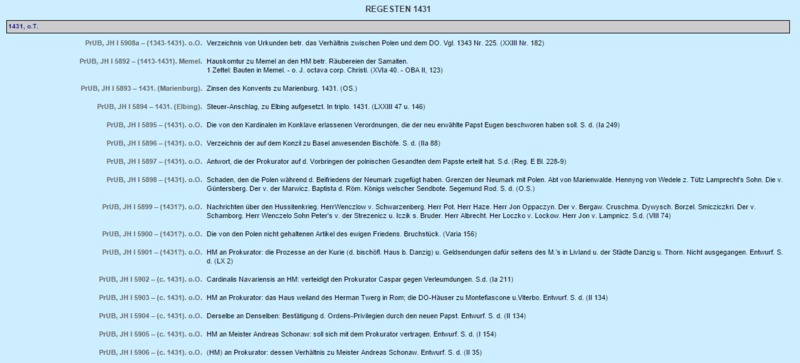

For example, the year 1310 (to the left) includes links for some of the entries which lead to individual pages with varying amounts of information about that source. The page for 1431 (below), moreover, features a completely new format. This new format has variations of its own, and is used inconsistently for the later material.

Other minor inconsistencies, like the use of a hyphen instead of a longer dash to separate information, complicate the demands on the scrapers’ sophistication. For the purposes of the project, I was able to retrieve data consistently from 1140-1345, then from about 1382 to 1449. The formatting of the pages between 1345 and 1382 is quite complicated and inconsistent. And to make matters worse, the digital Urkundenbuch is itself inconsistent in the years it includes.
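One way to cope with the hyphen-versus-dash problem is to make the separator itself tolerant. The sketch below is an illustration rather than the scraper I used: it assumes that a separator is always surrounded by whitespace (so that hyphens inside words or place names are left alone), which may not hold on every page, and `SEP` and `split_entry` are hypothetical names.

```python
import re

# Accept a hyphen, an en dash or an em dash as the field separator,
# but only when it has whitespace on both sides.
SEP = re.compile(r"\s+[-\u2013\u2014]\s+")


def split_entry(text):
    """Split 'source SEP date/place SEP contents'; return None if it does not fit."""
    parts = SEP.split(text.strip(), maxsplit=2)
    if len(parts) != 3:
        return None  # flag for manual review instead of guessing
    source, date_place, contents = (part.strip() for part in parts)
    return {"source": source, "date_place": date_place, "contents": contents}


if __name__ == "__main__":
    # Placeholder entries, not real data from the website.
    print(split_entry("PrUB 101 \u2014 1310 Jan 1, Place A \u2014 Description one"))
    print(split_entry("PrUB 102 - 1310 Feb 2, Place-B - Description two"))
    print(split_entry("an entry with no separators"))
```

Limiting the split to two separators keeps any later dash inside the contents description, and returning `None` for entries that do not fit makes the inconsistent pages easy to count and inspect by hand.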

Overall, I was able to datamine information from about 5,000 sources across roughly three centuries. There are a few observations to make at this point. First, the resulting gap in my data between 1349 and 1392 does not undermine the whole project, but will certainly be a factor in ultimately interpreting the results. Similarly, 5,000 documents is only a fraction of the documentary evidence produced (and the evidence surviving) during the period. Still, it accounts for most of the published material, making it a rich amount of data to start experimenting with. Finally, it is worth repeating that this is data from published volumes compiled and edited by several generations of German scholars. It is a great privilege for modern historians to build off the intensive efforts of their forerunners, but it is imperative to avoid treating this data as “raw.” Instead, it bears the imprint of these past historians—not only the fruits of their erudition, but also the idiosyncrasies, inconsistencies, and possible errors of their methods. Altogether, these underlying problems illustrate some of the key challenges in “digital” history.