Methods and Tools

This project began with two objectives:

- to give a better overview of the circulation of key texts, providing a reference collection for scholars as well as a comprehensible overview for nonspecialists

- to analyze the texts themselves to better identify whether they should be considered astrological, astronomical, cosmological, etc.

Its underlying motivation is to make information about medieval astral scientific ideas both more accessible and more rigorously defined. Current scholarship on the astral sciences in medieval Europe presents a steep learning curve even for fairly basic material, which prevents its far-reaching conclusions from being meaningfully incorporated into broader scholarly discussions. This project aims to bridge that gulf by rendering the collected data visually, in a way that is more intuitive than a chronological narrative of who wrote or translated which text and where.

Moreover, the scholarship is markedly ambivalent about which texts, or which parts of texts, are relevant to discussions of the history of mathematics, science, pseudoscience, magic, and various other intellectual developments. This project aims to provide a more objective rubric for evaluating those sources by quantifying the frequency with which key words (such as astrology, astronomy, magicians, or mathematicians) are employed.
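As a rough illustration of what such a rubric could look like, the sketch below counts how often a few key-word stems occur per 1,000 words of a text. The stems, the function name, and the sample sentence are assumptions made for illustration, not the project's actual word list or data; matching on stems is simply one way to catch inflected Latin forms.

```python
import re
from collections import Counter

# Hypothetical key-word stems; a stem catches inflected forms
# such as astrologia, astrologiae, astrologi.
KEY_STEMS = ("astrolog", "astronom", "mathematic", "magic")

def keyword_rates(text, stems=KEY_STEMS, per=1000):
    """Return occurrences of each stem per `per` words of `text`."""
    # Assumes plain ASCII Latin; accented or ligature characters split words.
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter()
    for word in words:
        for stem in stems:
            if word.startswith(stem):
                counts[stem] += 1
    total = len(words) or 1  # avoid dividing by zero on empty input
    return {stem: counts[stem] * per / total for stem in stems}

# Invented sample sentence, for illustration only.
sample = "Astrologia et astronomia differunt, nam astrologi de iudiciis tractant."
rates = keyword_rates(sample)
```

Normalizing by text length keeps a long treatise from appearing more "astrological" than a short one merely because it contains more words.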

Analysis of these texts' metadata and word content is still ongoing, but will likely lead to insights that emerge from unexpected patterns. At this stage, the project's guiding questions are:

- What meaningful correlations can be drawn between a text’s circumstances of production (its author, language, date and provenance, purpose and format) and its contents, specifically its stance on the astral sciences?

- What are some significant ideological differences among the contents of texts intended for various audiences, and how can scholars discuss those differences with a more objective rubric elucidated by a quantitative analysis of a text’s vocabulary?

- Is “distant reading” of technical medieval material, based on data drawn from a substantially truncated historical record, feasible?

Building a Database

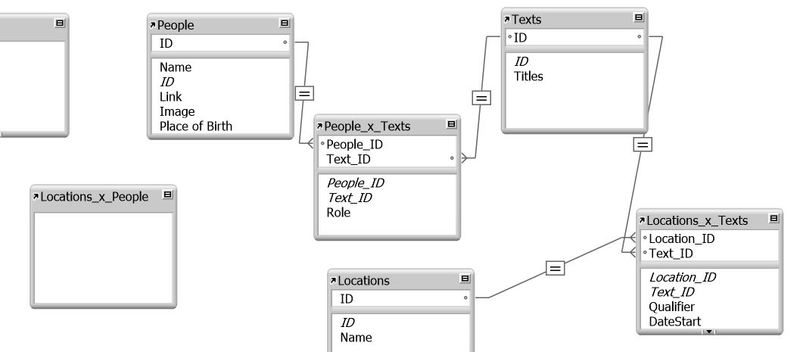

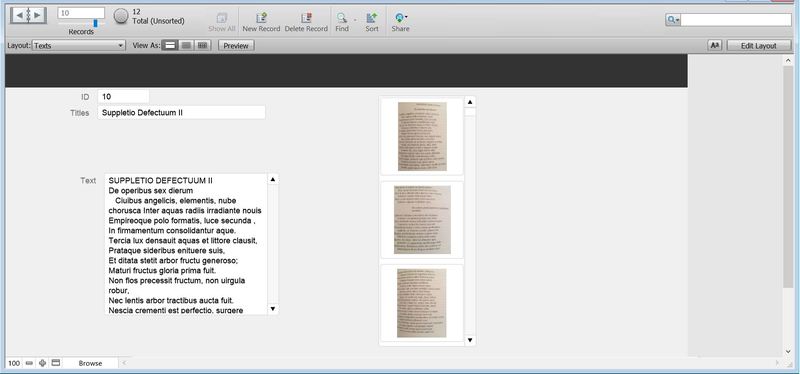

The project's first objective resulted in a relational database built with FileMaker Pro (version 13), which linked texts with locations and with authors, translators, and commentators. FileMaker's layout allowed me to create records for each of my items (for example: texts, people, and locations) and to link certain characteristics of those items (for example: locations, or whether a person was a text's author, commentator, or translator). On the record for each text, I created "portals" to see the text's contents (displayed as plain text) alongside the images that were OCRed to obtain the plain text. This will allow me, or anyone who has access to the database, to correct OCR errors by painstakingly comparing the OCRed plain text with the original image.
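For readers without FileMaker, the same kind of relational structure can be sketched with Python's built-in sqlite3 module. This is only an approximation under stated assumptions: the table names, column names, and the single sample row are hypothetical and are not taken from the project's database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE locations (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE people    (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE texts (
    id INTEGER PRIMARY KEY,
    title TEXT NOT NULL,
    location_id INTEGER REFERENCES locations(id)
);
-- Join table: one person can hold different roles for different texts.
CREATE TABLE text_people (
    text_id   INTEGER REFERENCES texts(id),
    person_id INTEGER REFERENCES people(id),
    role TEXT CHECK (role IN ('author', 'translator', 'commentator'))
);
""")

# Illustrative row only; not drawn from the project's records.
conn.execute("INSERT INTO locations VALUES (1, 'Toledo')")
conn.execute("INSERT INTO people VALUES (1, 'Gerard of Cremona')")
conn.execute("INSERT INTO texts VALUES (1, 'Almagest (Latin translation)', 1)")
conn.execute("INSERT INTO text_people VALUES (1, 1, 'translator')")

# Link each text to its people, their roles, and its location.
rows = conn.execute("""
    SELECT t.title, p.name, tp.role, l.name
    FROM texts t
    JOIN text_people tp ON tp.text_id = t.id
    JOIN people p       ON p.id = tp.person_id
    JOIN locations l    ON l.id = t.location_id
""").fetchall()
```

Keeping the role on the join table, rather than on the person, lets the same individual appear as the author of one text and the commentator of another.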

However, I realized that any meaningful conclusions I might draw from that database would lack substance without a better understanding of the ideas being transmitted, which required analyzing the texts. If the heart of the project was the texts themselves, then my second objective should become my primary focus.

Building a Readable Corpus

The project's second objective of analyzing the texts required obtaining print copies of Latin texts, rendering them useable with OCR software, and cleaning up the OCR output using regular expressions in order to run the "stripped-down" plain text through various programs that facilitated “distant reading” (see the section immediately below if you're unfamiliar with this method).
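A minimal sketch of this kind of regular-expression cleanup follows. The specific patterns and the sample string are assumptions for illustration, not the expressions actually used in the project, and real OCR output will need additional rules as well as hand correction.

```python
import re

def clean_ocr(raw):
    """Reduce raw OCR output to lowercase, space-separated words."""
    text = re.sub(r"-\s*\n\s*", "", raw)         # rejoin words hyphenated across lines
    text = re.sub(r"(?m)^\s*\d+\s*$", "", text)  # drop lines holding only a page number
    # Assumes plain ASCII Latin: everything except letters and whitespace is removed.
    text = re.sub(r"[^A-Za-z\s]", " ", text)
    text = re.sub(r"\s+", " ", text)             # collapse runs of whitespace
    return text.strip().lower()

# Invented sample, for illustration only.
sample = "De motu cae-\nlorum, et de ~ stellis.\n  12  \nSequitur..."
cleaned = clean_ocr(sample)
```

The order matters: hyphenated line breaks are rejoined before punctuation is stripped, otherwise the two halves of a broken word would be left as separate tokens.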

For reasons that are explained elsewhere, this part of the process took significantly longer than expected. However, because the project's purpose was to work intensively with the texts themselves (rather than with their metadata or other information), there was no alternative to tediously generating "clean" text data, often by hand. The resulting corpus will continue to grow, however slowly, and I expect meaningful results to be attainable within a year.

Distant reading

In 2005, Franco Moretti and the Stanford Literary Lab introduced the concept of distant reading: a way of comprehending the meaning of a large body of text through quantitative analysis rather than through a traditional, subjective "close" reading of it. Taking eighteenth-century British literature as an example, Moretti argued that "a field this large cannot be understood by stitching together separate bits of knowledge about individual cases, because it isn't a sum of individual cases: it's a collective system, that should be grasped as such, as a whole" (Moretti, 4).

Although the method of distant reading received significant scholarly and popular pushback in subsequent years, I found the practice to be extremely useful for this project. If the large body of work that I am interested in (Latin treatises on the astral sciences, numbering easily a few hundred from the high and late medieval period) has been categorized according to scholars' narrow, discipline-specific interpretations of the texts' contents, it seems worthwhile to return to the texts and allow them to speak for themselves, to as great an extent as is possible.

In this project, however, I map the subjective interpretations resulting from close reading onto the objective parameters of distant reading, using my own subjective but informed judgement. Whereas Moretti has suggested that distant reading replace rather than complement "close reading," I posit that distant reading of the corpus analyzed in this project will likely produce a better understanding of the major themes in these texts. Meanwhile, close reading of the individual texts will establish the particular associations among the astral sciences (primarily astronomy, astrology, and cosmology).

Close Reading

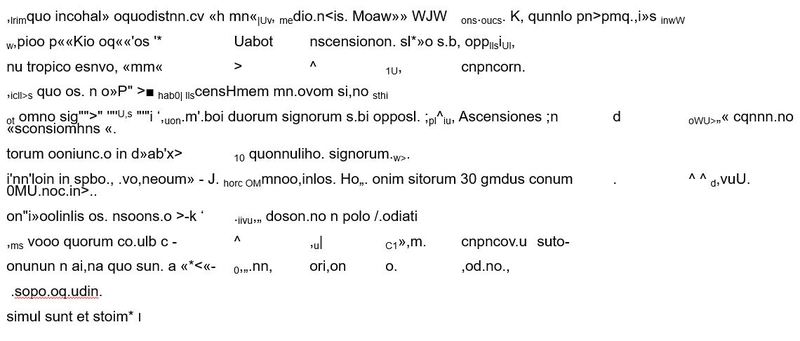

Because the substance of the relatively small number of texts I have is so critical to the project, I dedicated the majority of this semester's project to establishing a database of clean OCRed texts. While I was able to gather a number of useable images using my smartphone's camera and ABBYY OCR technology, certain Latin ligatures and abbreviations did not register (see image 1 at left). I painstakingly replaced any resulting gibberish with readable text using old-fashioned ocular transcription.

Because Latin is a highly flexible language and varies greatly from author to author, I found "close reading" to be indispensable to the project. Each of the authors in this dataset deployed different Latin syntax, which is overlooked by distant reading methods, and so I have found that a balance of both reading modalities has provided the best insight into this particular dataset. With such a small number of texts of such substance, it has been illuminating to encounter each text individually as well as within its larger group context.

Incidentally, in the process of "closely reading" the dataset, I encountered a word which was unfamiliar to the Latin scholars I asked to look at it: "hee." As shown in image 2 at left, the word is likely an elision of "hae" rather than a misspelled "haec."

Text Analysis and Topic Modeling

Topic modeling can help researchers to find meaningful patterns within a sufficiently large corpus of printed sources, and has been described as “a method for finding and tracing clusters of words (called “topics” in shorthand) in large bodies of texts.” I have attempted to make use of various topic modeling programs, such as the Zotero plug-in Paper Machines and the stand-alone Mallet. Rendering the text amenable to topic modeling, however, required a large number of hours to "clean up" OCR text (such as the gibberish pictured above), so with only a dozen usable sources to work with, I have had limited success in finding meaningful patterns thus far.

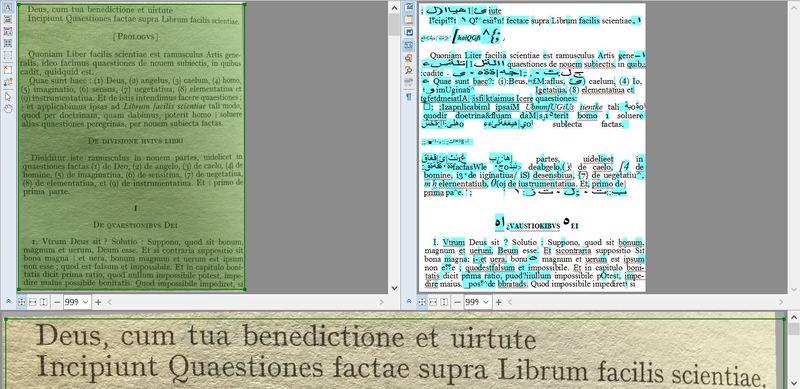

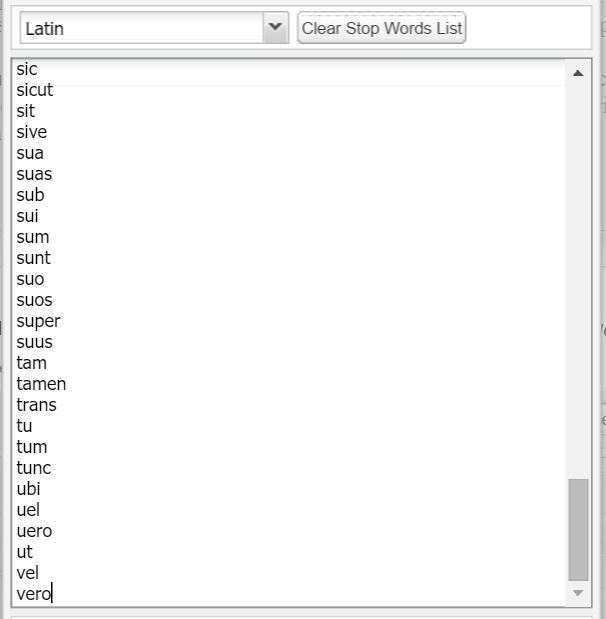

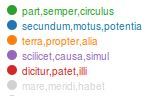

One critical component of text analysis is directing the analytical tool to focus on only the useful or meaningful words, which is usually accomplished by providing a list of "stopwords" for the program to skip over when generating its output. While most text analysis tools provide pregenerated lists of stopwords for common languages like English, only very partial lists were available for Latin. As a result, I had to continually augment the list of stopwords as I added new texts (and remember to add each of a word's declined Latin forms; for example, hic, haec, and hoc). I found that Voyant Tools was more responsive to eliminating stopwords than Paper Machines, so I used its analysis of the corpus' vocabulary to create a baseline for further research questions (see the first image on the left).
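The bookkeeping described above can be sketched as follows: each stopword is stored with its inflected forms, so that adding a lemma means adding all of its forms at once. The list shown is a tiny hypothetical excerpt (the full paradigm of hic, plus a few other common words), not the project's actual stopword list, and the sample sentence is invented.

```python
import re
from collections import Counter

# Each entry pairs a stopword with its inflected forms.
# Hypothetical excerpt only; "sum" lists just a few forms of esse.
STOPWORD_FORMS = {
    "hic": ["hic", "haec", "hoc", "huius", "huic", "hunc", "hanc", "hac",
            "hi", "hae", "horum", "harum", "his", "hos", "has"],
    "et": ["et"],
    "in": ["in"],
    "sum": ["est", "sunt", "esse"],
}
# Flatten into one set for fast membership tests.
STOPWORDS = {form for forms in STOPWORD_FORMS.values() for form in forms}

def content_word_counts(text, stopwords=STOPWORDS):
    """Count the words of `text` that are not in the stopword set."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w not in stopwords)

# Invented sample sentence, for illustration only.
sample = "Haec stella et hoc signum in caelo sunt; stella movetur."
counts = content_word_counts(sample)
```

The flattened set can also be written out one word per line, which is the plain-text stoplist format that many text analysis tools accept.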

It is worth emphasizing that topic modeling programs can help researchers to find meaningful patterns, and also that those patterns are most apparent in a sufficiently large corpus of printed sources. Programs such as Paper Machines are far from plug-and-play tools, so experimenting with the number of topics they detect (50? 130? 18?) as well as the types of clusters they find (most common? most coherent? most variable?) will yield different findings overall. Moreover, these findings are most apparent in datasets much larger than the one I have built thus far.
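With the stand-alone Mallet, one way to compare several topic counts is to script the runs. The sketch below only builds the command lines and does not run Mallet itself. The `import-dir` and `train-topics` commands and their options belong to Mallet, while the function name, the file and directory names, and the path to the Mallet launcher are assumptions for illustration.

```python
def mallet_commands(corpus_dir, stoplist, topic_counts, mallet="bin/mallet"):
    """Build one import command plus one training command per topic count."""
    commands = [[
        mallet, "import-dir",
        "--input", corpus_dir,
        "--output", "corpus.mallet",
        "--keep-sequence",             # topic modeling needs word sequences
        "--stoplist-file", stoplist,   # custom Latin stopwords, one per line
    ]]
    for k in topic_counts:
        commands.append([
            mallet, "train-topics",
            "--input", "corpus.mallet",
            "--num-topics", str(k),
            "--output-topic-keys", f"keys_{k}.txt",
            "--output-doc-topics", f"doc_topics_{k}.txt",
        ])
    return commands

# Hypothetical paths; the topic counts echo the ones mentioned in the text.
commands = mallet_commands("corpus_txt", "latin_stopwords.txt", [18, 50, 130])
```

Once Mallet is installed, each list could be passed to `subprocess.run(cmd, check=True)`, and the resulting topic-key files compared side by side.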

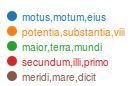

As shown in the third and fourth images on the left, Paper Machines found few meaningful patterns in the corpus of only a dozen texts. When grouped into 50 topics according to most common occurrence, the top two topics dealt with motion and substance. These may be helpful for thinking about the ways in which "scientific objects" from the celestial realm were formed. When grouped into 70 topics according to the most coherent occurrences, we see that circles, motion, the earth, and causes form the top four topics. As the corpus is augmented in the coming months, I expect to see further clarification among these topics, hopefully to a degree that will be useful in the project's second objective: to better identify whether the individual texts should be considered astrological, astronomical, cosmological, or of a different category altogether.