Text data

The newspapers were acquired in Portable Document Format (PDF). Because the resolution of the original PDF files is low, text recognition was a challenge, whether by software or by the human eye. In total, 534 issues from July 1948 to July 1950 were to be analyzed for the project, not including six missing issues. Each issue contained four to six pages of Korean text, written largely in North Korean terminology and orthography and using Chinese numerals.

Two of the leading OCR engines on the market were tested in an attempt to automate the text recognition process. The first was Adobe Acrobat, which gave consistently inaccurate readings and output laden with stray symbols, making it unsuitable for any post-OCR manipulation. The second was ABBYY FineReader, which recognized the text better than Adobe Acrobat but still produced output that was far from usable.

Several problems prevented the use of digital OCR. First, the scan quality of the PDF originals was too low, in some cases even for the human eye. Second, the low production quality of the newspaper itself left the content misaligned, so the OCR engines failed to separate one article from another and even text from images. Third, the top-to-bottom, right-to-left layout of the Korean text seems to have played a role.

As a result, the article headlines were typed manually, initially into a Microsoft Excel spreadsheet. Attached to each headline were several pieces of metadata: the author's name if available, the page number, the issue number, the date, and a unique ID number. Non-text items such as photos, illustrations, and songs were also included in the list. All in all, more than 10,700 articles were found in the two-year period, with the headline text totaling more than 47,000 words.
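As a rough illustration of one spreadsheet row, the metadata listed above could be modeled as a small record type. This is only a sketch: the class name, field names, and the `kind` labels are assumptions made here for illustration, and the example row is invented rather than taken from the actual spreadsheet.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class HeadlineRecord:
    """One manually typed headline plus its metadata (hypothetical layout)."""
    article_id: int          # unique ID number
    headline: str            # headline text as typed
    author: Optional[str]    # None when no author is given
    page: int                # page number within the issue
    issue: int               # issue number
    published: date          # date of the issue
    kind: str = "text"       # assumed labels: "text", "photo", "illustration", "song"


# Invented example row, not taken from the project data.
example = HeadlineRecord(
    article_id=1,
    headline="(headline text)",
    author=None,
    page=1,
    issue=1,
    published=date(1948, 7, 1),
)
print(example)
```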

A trial run of several text analysis programs showed that Korean text was either unusable or supported only with limited functionality. One of three transformations was necessary to make full use of the Korean-language data for textual analysis. First, the text could be translated into English, but this would be the most time-consuming option. Second, the text could be parsed using methods developed for Chinese or Japanese texts, so that it could be used in text analysis software compatible with Korean. The third option was to parse the text manually using regular expressions.

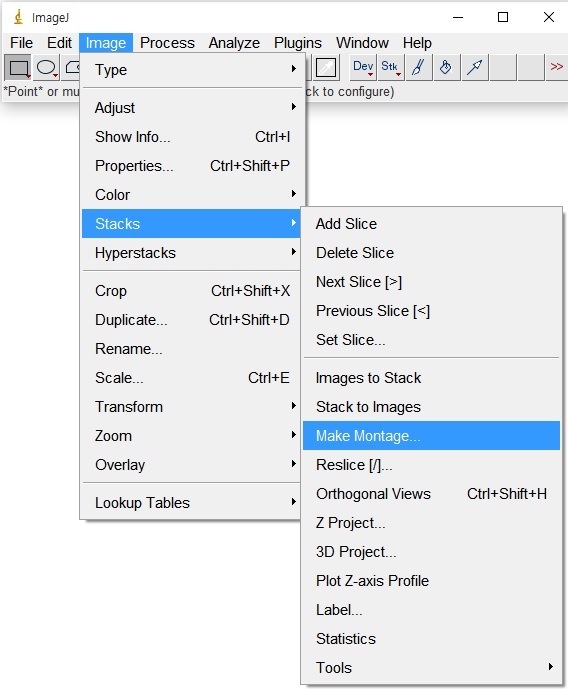

The third option, manual parsing with regular expressions, was chosen for this project. Regular expressions and the EditPad software were used extensively for this purpose. Although there is no preexisting standard procedure or software for breaking Korean text down into morphemes for use in text analysis, I established some general guidelines to maintain internal consistency. The following five steps were taken to convert "raw" Korean text to morphemes:

- Isolate relevant headlines

Out of more than 10,000 headlines, only those relevant to my research goals were selected. The regular expression `^.*\b(.*keyword.*)\b.*$` was used to select every complete headline line that contained the keyword.

- Remove unnecessary symbols and numbers

Symbols and Arabic numerals were deemed generally devoid of informational value in this newspaper. Regular expressions made it easy to select all symbols and numbers for removal from the selected headlines.

- Break text down to morphemes

Various particles in the Korean language, those that add little or no meaning to the word they attach to, were removed using regular expressions. Adjectives and verbs were converted to their respective standard forms.

- Remove stop words

Words of little informational value, such as "this" and "who," among many others, were removed from the remaining text, again using regular expressions for the sake of efficiency.

- Add spaces where appropriate

When the headline text was originally extracted, I made the mistake of being careless about consistent spacing between words. To rectify this, I experimented with various tools, including a helpful glitch in Google Translate that automatically added spaces to Korean text, but to no avail. This was by far the most laborious step, requiring case-by-case judgment. Proper nouns were among the easiest to fix, since names, for instance, can take a space afterward in any situation. Without the ability of regular expressions to select and modify multiple words at once, this might have become a prohibitively time-consuming task.
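The first four steps can be sketched in Python as follows. The project itself used EditPad rather than a script, so this is only an approximation under stated assumptions: the sample headlines are invented, the particle and stop-word lists are tiny placeholders, and the function names are hypothetical. Only the keyword pattern in step 1 comes from the text above. Converting verbs and adjectives to their standard forms and the manual spacing step are not covered, since both relied on case-by-case judgment.

```python
import re

# Hypothetical sample headlines, invented for illustration (not from the corpus).
HEADLINES = [
    "인민경제계획 1949년도 달성!",
    "농민들의 토지개혁 (사진)",
    "이 학교는 인민의 힘으로 건설",
]

# Assumed, deliberately tiny lists; the project's real lists are not given.
PARTICLES = ["으로", "는", "의"]
STOP_WORDS = ["이", "누구"]  # roughly "this" and "who"


def isolate(headlines, keyword):
    """Step 1: keep whole lines that contain the keyword."""
    pattern = re.compile(
        r"^.*\b(.*" + re.escape(keyword) + r".*)\b.*$", re.MULTILINE
    )
    return [m.group(0) for m in pattern.finditer("\n".join(headlines))]


def strip_symbols_and_digits(line):
    """Step 2: blank out punctuation, underscores, and Arabic digits.

    Chinese numerals are left alone because only 0-9 is targeted.
    """
    line = re.sub(r"[^\w\s]|[0-9_]", " ", line)
    return re.sub(r"\s+", " ", line).strip()


def strip_particles(line):
    """Step 3 (partial): drop a listed particle from the end of a longer word.

    This naive rule would also clip real words that happen to end in one of
    these syllables, which is where manual judgment comes in.
    """
    alternatives = "|".join(map(re.escape, PARTICLES))
    return re.sub(r"(?<=\w)(?:" + alternatives + r")\b", "", line)


def remove_stop_words(line):
    """Step 4: delete stand-alone stop words, then tidy the spacing."""
    alternatives = "|".join(map(re.escape, STOP_WORDS))
    line = re.sub(r"\b(?:" + alternatives + r")\b", " ", line)
    return re.sub(r"\s+", " ", line).strip()


selected = isolate(HEADLINES, "인민")
cleaned = [
    remove_stop_words(strip_particles(strip_symbols_and_digits(h)))
    for h in selected
]
for line in cleaned:
    print(line)
```

Python's `re` module treats Hangul syllables as word characters for `str` patterns, so `\b` behaves here much as it does for Latin text. Other regular expression engines may handle word boundaries in Korean differently.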